The argument and thought-experiment now generally known as the Chinese Room Argument was first published in a 1980 article by American philosopher John Searle (1932– ). It has become one of the best-known arguments in recent philosophy. Searle imagines himself alone in a room following a computer program for responding to Chinese characters slipped under the door. Searle understands nothing of Chinese, and yet, by following the program for manipulating symbols and numerals just as a computer does, he sends appropriate strings of Chinese characters back out under the door, and this leads those outside to mistakenly suppose there is a Chinese speaker in the room.

The narrow conclusion of the argument is that programming a digital computer may make it appear to understand language but could not produce real understanding. Hence the “Turing Test” is inadequate. Searle argues that the thought experiment underscores the fact that computers merely use syntactic rules to manipulate symbol strings, but have no understanding of meaning or semantics. The broader conclusion of the argument is that the theory that human minds are computer-like computational or information processing systems is refuted. Instead minds must result from biological processes; computers can at best simulate these biological processes. Thus the argument has large implications for semantics, philosophy of language and mind, theories of consciousness, computer science and cognitive science generally. As a result, there have been many critical replies to the argument.

- 1. Overview
- 2. Historical Background
  - 2.1 Leibniz’ Mill
  - 2.2 Turing’s Paper Machine
  - 2.3 The Chinese Nation
- 3. The Chinese Room Argument
- 4. Replies to the Chinese Room Argument
  - 4.1 The Systems Reply
  - 4.2 The Robot Reply
  - 4.3 The Brain Simulator Reply
  - 4.4 The Other Minds Reply
  - 4.5 The Intuition Reply
- 5. The Larger Philosophical Issues
  - 5.1 Syntax and Semantics
  - 5.2 Intentionality
  - 5.3 Mind and Body
  - 5.4 Simulation, duplication and evolution
- Conclusion
- Bibliography
- Academic Tools
- Other Internet Resources

1. Overview

Work in Artificial Intelligence (AI) has produced computer programs that can beat the world chess champion, control autonomous vehicles, complete our email sentences, and defeat the best human players on the television quiz show Jeopardy. AI has also produced programs with which one can converse in natural language, including customer service “virtual agents”, and Amazon’s Alexa and Apple’s Siri. Our experience shows that playing chess or Jeopardy, and carrying on a conversation, are activities that require understanding and intelligence. Does computer prowess at conversation and challenging games then show that computers can understand language and be intelligent? Will further development result in digital computers that fully match or even exceed human intelligence? Alan Turing (1950), one of the pioneer theoreticians of computing, believed the answer to these questions was “yes”. Turing proposed what is now known as ‘The Turing Test’: if a computer can pass for human in online chat, we should grant that it is intelligent. By the late 1970s some AI researchers claimed that computers already understood at least some natural language. In 1980 U.C. Berkeley philosopher John Searle introduced a short and widely-discussed argument intended to show conclusively that it is impossible for digital computers to understand language or think.

Searle argues that a good way to test a theory of mind, say a theory that holds that understanding can be created by doing such and such, is to imagine what it would be like to actually do what the theory says will create understanding. Searle (1999) summarized his Chinese Room Argument (hereinafter, CRA) concisely:

Imagine a native English speaker who knows no Chinese locked in a room full of boxes of Chinese symbols (a data base) together with a book of instructions for manipulating the symbols (the program). Imagine that people outside the room send in other Chinese symbols which, unknown to the person in the room, are questions in Chinese (the input). And imagine that by following the instructions in the program the man in the room is able to pass out Chinese symbols which are correct answers to the questions (the output). The program enables the person in the room to pass the Turing Test for understanding Chinese but he does not understand a word of Chinese.

Searle goes on to say, “The point of the argument is this: if the man in the room does not understand Chinese on the basis of implementing the appropriate program for understanding Chinese then neither does any other digital computer solely on that basis because no computer, qua computer, has anything the man does not have.”

Thirty years after introducing the CRA, Searle (2010) describes the conclusion in terms of consciousness and intentionality:

I demonstrated years ago with the so-called Chinese Room Argument that the implementation of the computer program is not by itself sufficient for consciousness or intentionality (Searle 1980). Computation is defined purely formally or syntactically, whereas minds have actual mental or semantic contents, and we cannot get from syntactical to the semantic just by having the syntactical operations and nothing else. To put this point slightly more technically, the notion “same implemented program” defines an equivalence class that is specified independently of any specific physical realization. But such a specification necessarily leaves out the biologically specific powers of the brain to cause cognitive processes. A system, me, for example, would not acquire an understanding of Chinese just by going through the steps of a computer program that simulated the behavior of a Chinese speaker (p.17).

“Intentionality” is a technical term for a feature of mental and certain other things, namely being about something. Thus a desire for a piece of chocolate and thoughts about real Manhattan or fictional Harry Potter all display intentionality, as will be discussed in more detail in section 5.2 below.

Searle’s shift from machine understanding to consciousness and intentionality is not directly supported by the original 1980 argument. However, the re-description of the conclusion indicates the close connection between understanding and consciousness in Searle’s later accounts of meaning and intentionality. Those who don’t accept Searle’s linking account might hold that running a program can create understanding without necessarily creating consciousness, and conversely that a fancy robot might have dog-level consciousness, desires, and beliefs without necessarily understanding natural language.

In moving to discussion of intentionality Searle seeks to develop the broader implications of his argument. It aims to refute the functionalist approach to understanding minds, that is, the approach that holds that mental states are defined by their causal roles, not by the stuff (neurons, transistors) that plays those roles. The argument counts especially against that form of functionalism known as the Computational Theory of Mind that treats minds as information processing systems. As a result of its scope, as well as Searle’s clear and forceful writing style, the Chinese Room argument has probably been the most widely discussed philosophical argument in cognitive science to appear since the Turing Test. By 1991 computer scientist Pat Hayes had defined Cognitive Science as the ongoing research project of refuting Searle’s argument. Cognitive psychologist Steven Pinker (1997) pointed out that by the mid-1990s well over 100 articles had been published on Searle’s thought experiment – and that discussion of it was so pervasive on the Internet that Pinker found it a compelling reason to remove his name from all Internet discussion lists.

This interest has not subsided, and the range of connections with the argument has broadened. A search on Google Scholar for “Searle Chinese Room” limited to the period from 2010 through 2019 produced over 2000 results, including papers making connections between the argument and topics ranging from embodied cognition to theater to talk psychotherapy to postmodern views of truth and “our post-human future” – as well as discussions of group or collective minds and discussions of the role of intuitions in philosophy. In 2007 a game company took the name “The Chinese Room” in joking honor of “...Searle’s critique of AI – that you could create a system that gave the impression of intelligence without any actual internal smarts.” This wide range of discussion and implications is a tribute to the argument’s simple clarity and centrality.

2. Historical Background

2.1 Leibniz’ Mill

Searle’s argument has four important antecedents. The first of these is an argument set out by the philosopher and mathematician Gottfried Leibniz (1646–1716). This argument, often known as “Leibniz’ Mill”, appears as section 17 of Leibniz’ Monadology. Like Searle’s argument, Leibniz’ argument takes the form of a thought experiment. Leibniz asks us to imagine a physical system, a machine, that behaves in such a way that it supposedly thinks and has experiences (“perception”).

17. Moreover, it must be confessed that perception and that which depends upon it are inexplicable on mechanical grounds, that is to say, by means of figures and motions. And supposing there were a machine, so constructed as to think, feel, and have perception, it might be conceived as increased in size, while keeping the same proportions, so that one might go into it as into a mill. That being so, we should, on examining its interior, find only parts which work one upon another, and never anything by which to explain a perception. Thus it is in a simple substance, and not in a compound or in a machine, that perception must be sought for. [Robert Latta translation]

Notice that Leibniz’ strategy here is to contrast the overt behavior of the machine, which might appear to be the product of conscious thought, with the way the machine operates internally. He points out that these internal mechanical operations are just parts moving from point to point, so there is nothing in them that is conscious or that can explain thinking, feeling or perceiving. For Leibniz, physical states are neither sufficient for, nor constitutive of, mental states.

2.2 Turing’s Paper Machine

A second antecedent to the Chinese Room argument is the idea of a paper machine, a computer implemented by a human. This idea is found in the work of Alan Turing, for example in “Intelligent Machinery” (1948). Turing writes there that he wrote a program for a “paper machine” to play chess. A paper machine is a kind of program, a series of simple steps like a computer program, but written in natural language (e.g., English), and implemented by a human. The human operator of the paper chess-playing machine need not (otherwise) know how to play chess. All the operator does is follow the instructions for generating moves on the chess board. In fact, the operator need not even know that he or she is involved in playing chess – the input and output strings, such as “N-QB7”, need mean nothing to the operator of the paper machine.

As part of the WWII project to decipher German military encryption, Turing had written English-language programs for human “computers”, as these specialized workers were then known, and these human computers did not need to know what the programs that they implemented were doing.

One reason the idea of a human-plus-paper machine is important is that it already raises questions about agency and understanding similar to those in the CRA. Suppose I am alone in a closed room and follow an instruction book for manipulating strings of symbols. I thereby implement a paper machine that generates symbol strings such as “N-KB3” that I write on pieces of paper and slip under the door to someone outside the room. Suppose further that prior to going into the room I don’t know how to play chess, or even that there is such a game. However, unbeknownst to me, in the room I am running Turing’s chess program and the symbol strings I generate are chess notation and are taken as chess moves by those outside the room. They reply by sliding the symbols for their own moves back under the door into the room. If all you see is the resulting sequence of moves displayed on a chess board outside the room, you might think that someone in the room knows how to play chess very well. Do I now know how to play chess? Or is it the system (consisting of me, the manuals, and the paper on which I manipulate strings of symbols) that is playing chess? If I memorize the program and do the symbol manipulations inside my head, do I then know how to play chess, albeit with an odd phenomenology? Do someone’s conscious states matter for whether or not they know how to play chess? If a digital computer implements the same program, does the computer then play chess, or merely simulate this?

By mid-century Turing was optimistic that the newly developed electronic computers themselves would soon be able to exhibit apparently intelligent behavior, answering questions posed in English and carrying on conversations. Turing (1950) proposed what is now known as the Turing Test: if a computer could pass for human in on-line chat, it should be counted as intelligent.

A third antecedent of Searle’s argument was the work of Searle’s colleague at Berkeley, Hubert Dreyfus. Dreyfus was an early critic of the optimistic claims made by AI researchers. In 1965, when Dreyfus was at MIT, he published a report of roughly a hundred pages titled “Alchemy and Artificial Intelligence”. Dreyfus argued that key features of human mental life could not be captured by formal rules for manipulating symbols. Dreyfus moved to Berkeley in 1968 and in 1972 published his extended critique, “What Computers Can’t Do”. Dreyfus’ primary research interests were in Continental philosophy, with its focus on consciousness, intentionality, and the role of intuition and the unarticulated background in shaping our understandings. Dreyfus identified several problematic assumptions in AI, including the view that brains are like digital computers, and, again, the assumption that understanding can be codified as explicit rules.

However, by the late 1970s, as computers became faster and less expensive, some in the burgeoning AI community started to claim that their programs could understand English sentences, using a database of background information. The work of one of these, Yale researcher Roger Schank (Schank & Abelson 1977), came to Searle’s attention. Schank developed a technique called “conceptual representation” that used “scripts” to represent conceptual relations (related to Conceptual Role Semantics). Searle’s argument was originally presented as a response to the claim that AI programs such as Schank’s literally understand the sentences that they respond to.

2.3 The Chinese Nation

A fourth antecedent to the Chinese Room argument is a family of thought experiments involving myriad humans acting as a computer. In 1961 Anatoly Mickevich (pseudonym A. Dneprov) published “The Game”, a story in which a stadium full of 1400 math students is arranged to function as a digital computer (see Dneprov 1961 and the English translation listed at Mickevich 1961, Other Internet Resources). For four hours, each student repeatedly does a bit of calculation on binary numbers received from someone nearby, then passes the binary result on to another neighbor. The students learn the next day that they collectively translated a sentence from Portuguese into their native Russian. Mickevich’s protagonist concludes “We’ve proven that even the most perfect simulation of machine thinking is not the thinking process itself, which is a higher form of motion of living matter.”

Apparently independently, a similar consideration emerged in early discussion of functionalist theories of minds and cognition (see further discussion in section 5.3 below). Functionalists hold that mental states are defined by the causal role they play in a system (just as a door stop is defined by what it does, not by what it is made out of). Critics of functionalism were quick to turn its proclaimed virtue of multiple realizability against it. While functionalism was consistent with a materialist or biological understanding of mental states (arguably a virtue), it did not identify types of mental states (such as experiencing pain, or wondering about Oz) with particular types of neurophysiological states, as “type-type identity theory” did. In contrast with type-type identity theory, functionalism allowed sentient beings with different physiology to have the same types of mental states as humans – pains, for example.

But it was pointed out that if extraterrestrial aliens, with some other complex system in place of brains, could realize the functional properties that constituted mental states, then presumably so could systems even less like human brains. The computational form of functionalism, which holds that the defining role of each mental state is its role in information processing or computation, is particularly vulnerable to this maneuver, since a wide variety of systems with simple components are computationally equivalent (see e.g., Maudlin 1989 for discussion of a computer built from buckets of water). Critics asked if it was really plausible that these inorganic systems could have mental states or feel pain.

Daniel Dennett (1978) reports that in 1974 Lawrence Davis gave a colloquium at MIT in which he presented one such unorthodox implementation. Dennett summarizes Davis’ thought experiment as follows:

Let a functionalist theory of pain (whatever its details) be instantiated by a system the subassemblies of which are not such things as C-fibers and reticular systems but telephone lines and offices staffed by people. Perhaps it is a giant robot controlled by an army of human beings that inhabit it. When the theory’s functionally characterized conditions for pain are now met we must say, if the theory is true, that the robot is in pain. That is, real pain, as real as our own, would exist in virtue of the perhaps disinterested and businesslike activities of these bureaucratic teams, executing their proper functions.

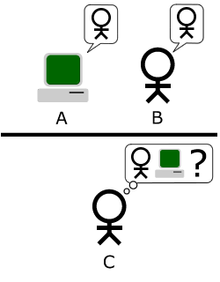

In “Troubles with Functionalism”, also published in 1978, Ned Block envisions the entire population of China implementing the functions of neurons in the brain. This scenario has subsequently been called “The Chinese Nation” or “The Chinese Gym”. We can suppose that every Chinese citizen would be given a call-list of phone numbers, and at a preset time on implementation day, designated “input” citizens would initiate the process by calling those on their call-list. When any citizen’s phone rang, he or she would then phone those on his or her list, who would in turn contact yet others. No phone message need be exchanged; all that is required is the pattern of calling. The call-lists would be constructed in such a way that the patterns of calls implemented the same patterns of activation that occur between neurons in someone’s brain when that person is in a mental state – pain, for example. The phone calls play the same functional role as neurons causing one another to fire. Block was primarily interested in qualia, and in particular, whether it is plausible to hold that the population of China might collectively be in pain, while no individual member of the population experienced any pain, but the thought experiment applies to any mental states and operations, including understanding language.
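The call-list mechanism can be made concrete with a minimal Python sketch. The citizens and call lists below are hypothetical and invented for illustration; Block specifies no particular wiring. The sketch also simplifies heavily by letting each citizen act only once, whereas neurons fire repeatedly and can inhibit one another. What it does show is Block’s point that no message content is passed: the only thing recorded is who rings whom, and when.

```python
from collections import deque

# Hypothetical call lists: each citizen phones everyone on his or her list.
# Names and links are invented for illustration; Block gives no wiring.
CALL_LISTS: dict[str, list[str]] = {
    "input_1": ["a", "b"],
    "a": ["c"],
    "b": ["c", "d"],
    "c": ["output"],
    "d": ["output"],
    "output": [],
}


def propagate(call_lists: dict[str, list[str]], starters: list[str]) -> dict[str, int]:
    """Return the step at which each citizen's phone first rings.

    No message content is exchanged: only who calls whom, and when, is
    recorded, mirroring the claim that the pattern of calling is all
    that matters. Simplification: each citizen makes calls only once.
    """
    first_ring: dict[str, int] = {name: 0 for name in starters}
    queue = deque(starters)
    while queue:
        caller = queue.popleft()
        for callee in call_lists[caller]:
            if callee not in first_ring:  # citizen has not been rung yet
                first_ring[callee] = first_ring[caller] + 1
                queue.append(callee)
    return first_ring


print(propagate(CALL_LISTS, ["input_1"]))
```

Run as written, this prints `{'input_1': 0, 'a': 1, 'b': 1, 'c': 2, 'd': 2, 'output': 3}`: a timing pattern that, on Block’s hypothesis, would mirror a pattern of neural activation, although no caller knows anything beyond the numbers on a list.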

Thus Block’s precursor thought experiment, as with those of Davis and Dennett, is a system of many humans rather than one. The focus is on consciousness, but to the extent that Searle’s argument also involves consciousness, the thought experiment is closely related to Searle’s. Cole (1984) tries to pump intuitions in the reverse direction by setting out a thought experiment in which each of his neurons is itself conscious, and fully aware of its actions including being doused with neurotransmitters, undergoing action potentials, and squirting neurotransmitters at its neighbors. Cole argues that his conscious neurons would find it implausible that their collective activity produced a consciousness and other cognitive competences, including understanding English, that the neurons lack. Cole suggests the intuitions of implementing systems are not to be trusted.

3. The Chinese Room Argument

In 1980 John Searle published “Minds, Brains and Programs” in the journal The Behavioral and Brain Sciences. In this article, Searle sets out the argument, and then replies to the half-dozen main objections that had been raised during his earlier presentations at various university campuses (see next section). In addition, Searle’s article in BBS was published along with comments and criticisms by 27 cognitive science researchers. These 27 comments were followed by Searle’s replies to his critics.

In the decades following its publication, the Chinese Room argument was the subject of very many discussions. In 1984 Searle presented the Chinese Room argument in a book, Minds, Brains and Science. In January 1990, the popular periodical Scientific American took the debate to a general scientific audience. Searle included the Chinese Room Argument in his contribution, “Is the Brain’s Mind a Computer Program?”, and Searle’s piece was followed by a responding article, “Could a Machine Think?”, written by philosophers Paul and Patricia Churchland. Soon thereafter Searle had a published exchange about the Chinese Room with another leading philosopher, Jerry Fodor (in Rosenthal (ed.) 1991).

The heart of the argument is Searle imagining himself following a symbol-processing program written in English (which is what Turing called “a paper machine”). The English speaker (Searle) sitting in the room follows English instructions for manipulating Chinese symbols, whereas a computer “follows” (in some sense) a program written in a computing language. The human produces the appearance of understanding Chinese by following the symbol manipulating instructions, but does not thereby come to understand Chinese. Since a computer just does what the human does – manipulate symbols on the basis of their syntax alone – no computer, merely by following a program, comes to genuinely understand Chinese.
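The purely syntactic character of the rule book can be illustrated with a toy Python sketch. The rule table is a hypothetical stand-in for Searle’s instruction book, and a lookup table is the crudest possible program, used here only for brevity; the argument is meant to apply to any program, however sophisticated, including ones like Schank’s. The English glosses appear only in comments for the reader: the procedure itself compares character sequences and copies out the prescribed reply, never consulting what any string means.

```python
# A hypothetical "rule book": input symbol strings paired with output strings.
# The English glosses in the comments are for the reader only; the procedure
# below never uses them.
RULE_BOOK: dict[str, str] = {
    "你好吗": "我很好，谢谢",          # "How are you?" -> "I'm fine, thanks"
    "今天天气怎么样": "今天天气很好",  # "How is the weather today?" -> "The weather is fine today"
}

# Fallback output when no rule matches ("Please say that again").
DEFAULT_REPLY = "请再说一遍"


def operator(slip: str) -> str:
    """Return the output slip prescribed by the rule book.

    The lookup compares character sequences only (string equality),
    the analogue of matching symbols on the basis of their syntax alone.
    """
    return RULE_BOOK.get(slip.strip(), DEFAULT_REPLY)


for question in ["你好吗", "今天天气怎么样", "你是谁"]:
    print(question, "->", operator(question))
```

Someone outside who reads Chinese sees sensible replies to the first two questions; the operator function would behave identically if every character were swapped for an arbitrary meaningless token, which is the intuition the thought experiment trades on.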

This narrow argument, based closely on the Chinese Room scenario, is specifically directed at a position Searle calls “Strong AI”. Strong AI is the view that suitably programmed computers (or the programs themselves) can understand natural language and actually have other mental capabilities similar to the humans whose behavior they mimic. According to Strong AI, these computers really play chess intelligently, make clever moves, or understand language. By contrast, “weak AI” is the much more modest claim that computers are merely useful in psychology, linguistics, and other areas, in part because they can simulate mental abilities. But weak AI makes no claim that computers actually understand or are intelligent. The Chinese Room argument is not directed at weak AI, nor does it purport to show that no machine can think – Searle says that brains are machines, and brains think. The argument is directed at the view that formal computations on symbols can produce thought.

We might summarize the narrow argument as a reductio ad absurdum against Strong AI as follows. Let L be a natural language, and let us say that a “program for L” is a program for conversing fluently in L. A computing system is any system, human or otherwise, that can run a program.

- If Strong AI is true, then there is a program for Chinese such that if any computing system runs that program, that system thereby comes to understand Chinese.

- I could run a program for Chinese without thereby coming to understand Chinese.

- Therefore Strong AI is false.
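The validity of this reductio can be checked mechanically. The Lean 4 sketch below is one possible first-order regimentation, not Searle’s own formulation: it reads the second premise as holding for any program for Chinese (for every such program, some system, such as Searle in the room, runs it without understanding Chinese), and it sets aside the modal construal. The names `StrongAI`, `ProgramFor`, `Runs`, and `Understands` are hypothetical labels introduced here for illustration.

```lean
-- One possible first-order regimentation of the narrow argument
-- (an illustrative assumption, not Searle's own formulation).
theorem strongAI_false
    {System Program : Type}
    (StrongAI : Prop)
    (ProgramFor : Program → Prop)          -- p is a program for Chinese
    (Runs : System → Program → Prop)       -- system s runs program p
    (Understands : System → Prop)          -- s understands Chinese
    -- Premise 1: if Strong AI is true, some program for Chinese confers
    -- understanding on every system that runs it.
    (p1 : StrongAI → ∃ p, ProgramFor p ∧ ∀ s, Runs s p → Understands s)
    -- Premise 2: every program for Chinese can be run by some system
    -- without that system understanding Chinese.
    (p2 : ∀ p, ProgramFor p → ∃ s, Runs s p ∧ ¬ Understands s) :
    ¬ StrongAI :=
  fun h =>
    match p1 h with
    | ⟨p, hp, hall⟩ =>
      match p2 p hp with
      | ⟨s, hrun, hnot⟩ => hnot (hall s hrun)
```

The proof is a plain modus tollens: assuming Strong AI yields a program that confers understanding on every system running it, while the second premise supplies a system running that same program without understanding. So the philosophical weight of the argument rests entirely on whether the premises are true, not on its logical form.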

The first premise elucidates the claim of Strong AI. The second premise is supported by the Chinese Room thought experiment. The conclusion of this narrow argument is that running a program cannot endow the system with language understanding. (There are other ways of understanding the structure of the argument. It may be relevant to understand some of the claims as counterfactual: e.g. “there is a program” in premise 1 as meaning there could be a program, etc. On this construal the argument involves modal logic, the logic of possibility and necessity (see Damper 2006 and Shaffer 2009)).

It is also worth noting that the first premise above attributes understanding to “the system”. Exactly what Strong AI supposes will acquire understanding when the program runs is crucial to the success or failure of the CRA. The title of Schank 1978 claims that his group’s computer, a physical device, understands, but in the body of the paper he claims that the program [“SAM”] is doing the understanding: SAM, Schank says “...understands stories about domains about which it has knowledge” (p. 133). As we will see in the next section (4), these issues about the identity of the understander (the CPU? the program? the system? something else?) quickly came to the fore for critics of the CRA. Searle’s wider argument includes the claim that the thought experiment shows more generally that one cannot get semantics (meaning) from syntax (formal symbol manipulation). That and related issues are discussed in section 5: The Larger Philosophical Issues.

4. Replies to the Chinese Room Argument

Criticisms of the narrow Chinese Room argument against Strong AI have often followed three main lines, which can be distinguished by how much they concede:

(1) Some critics concede that the man in the room doesn’t understand Chinese, but hold that nevertheless running the program may create comprehension of Chinese by something other than the room operator. These critics object to the inference from the claim that the man in the room does not understand Chinese to the conclusion that no understanding has been created. There might be understanding by a larger, smaller, or different entity. This is the strategy of The Systems Reply and the Virtual Mind Reply. These replies hold that the output of the room might reflect real understanding of Chinese, but the understanding would not be that of the room operator. Thus Searle’s claim that he doesn’t understand Chinese while running the room is conceded, but his claim that there is no understanding of the questions in Chinese, and that computationalism is false, is denied.

(2) Other critics concede Searle’s claim that just running a natural language processing program as described in the CR scenario does not create any understanding, whether by a human or a computer system. But these critics hold that a variation on the computer system could understand. The variant might be a computer embedded in a robotic body, having interaction with the physical world via sensors and motors (“The Robot Reply”), or it might be a system that simulated the detailed operation of an entire human brain, neuron by neuron (“The Brain Simulator Reply”).

(3) Finally, some critics do not concede even the narrow point against AI. These critics hold that the man in the original Chinese Room scenario might understand Chinese, despite Searle’s denials, or that the scenario is impossible. For example, critics have argued that our intuitions in such cases are unreliable. Other critics have held that it all depends on what one means by “understand” – points discussed in the section on The Intuition Reply. Others (e.g. Sprevak 2007) object to the assumption that any system (e.g. Searle in the room) can run any computer program. And finally some have argued that if it is not reasonable to attribute understanding on the basis of the behavior exhibited by the Chinese Room, then it would not be reasonable to attribute understanding to humans on the basis of similar behavioral evidence (Searle calls this last the “Other Minds Reply”). The objection is that we should be willing to attribute understanding in the Chinese Room on the basis of the overt behavior, just as we do with other humans (and some animals), and as we would do with extraterrestrial aliens (or burning bushes or angels) that spoke our language. This position is close to Turing’s own, when he proposed his behavioral test for machine intelligence.

In addition to these responses specifically to the Chinese Room scenario and the narrow argument to be discussed here, some critics also independently argue against Searle’s larger claim, and hold that one can get semantics (that is, meaning) from syntactic symbol manipulation, including the sort that takes place inside a digital computer, a question discussed in the section below on Syntax and Semantics.

4.1 The Systems Reply

In the original BBS article, Searle identified and discussed several responses to the argument that he had come across in giving the argument in talks at various places. As a result, these early responses have received the most attention in subsequent discussion. What Searle 1980 calls “perhaps the most common reply” is the Systems Reply.

The Systems Reply (which Searle says was originally associated with Yale, the home of Schank’s AI work) concedes that the man in the room does not understand Chinese. But, the reply continues, the man is but a part, a central processing unit (CPU), in a larger system. The larger system includes the huge database, the memory (scratchpads) containing intermediate states, and the instructions – the complete system that is required for answering the Chinese questions. So the Systems Reply is that while the man running the program does not understand Chinese, the system as a whole does.

Ned Block was one of the first to press the Systems Reply, along with many others including Jack Copeland, Daniel Dennett, Douglas Hofstadter, Jerry Fodor, John Haugeland, Ray Kurzweil and Georges Rey. Rey (1986) says the person in the room is just the CPU of the system. Kurzweil (2002) says that the human being is just an implementer and of no significance (presumably meaning that the properties of the implementer are not necessarily those of the system). Kurzweil hews to the spirit of the Turing Test and holds that if the system displays the apparent capacity to understand Chinese “it would have to, indeed, understand Chinese” – Searle is contradicting himself in saying in effect, “the machine speaks Chinese but doesn’t understand Chinese”.

Margaret Boden (1988) raises considerations about levels of description. “Computational psychology does not credit the brain with seeing bean-sprouts or understanding English: intentional states such as these are properties of people, not of brains” (244). “In short, Searle’s description of the robot’s pseudo-brain (that is, of Searle-in-the-robot) as understanding English involves a category-mistake comparable to treating the brain as the bearer, as opposed to the causal basis, of intelligence”. Boden (1988) points out that the room operator is a conscious agent, while the CPU in a computer is not – the Chinese Room scenario asks us to take the perspective of the implementer, and not surprisingly fails to see the larger picture.

Searle’s response to the Systems Reply is simple: in principle, he could internalize the entire system, memorizing all the instructions and the database, and doing all the calculations in his head. He could then leave the room and wander outdoors, perhaps even conversing in Chinese. But he still would have no way to attach “any meaning to the formal symbols”. The man would now be the entire system, yet he still would not understand Chinese. For example, he would not know the meaning of the Chinese word for hamburger. He still cannot get semantics from syntax.

In some ways Searle’s response here anticipates later extended mind views (e.g. Clark and Chalmers 1998): if Otto, who suffers loss of memory, can regain those recall abilities by externalizing some of the information to his notebooks, then Searle arguably can do the reverse: by internalizing the instructions and notebooks he should acquire any abilities had by the extended system. And so Searle in effect concludes that since he doesn’t acquire understanding of Chinese by internalizing the external components of the entire system (e.g. he still doesn’t know what the Chinese word for hamburger means), understanding was never there in the partially externalized system of the original Chinese Room.

In his 2002 paper “The Chinese Room from a Logical Point of View”, Jack Copeland considers Searle’s response to the Systems Reply and argues that a homunculus inside Searle’s head might understand even though the room operator himself does not, just as modules in minds solve tensor equations that enable us to catch cricket balls. Copeland then turns to consider the Chinese Gym, and again appears to endorse the Systems Reply: “…the individual players [do not] understand Chinese. But there is no entailment from this to the claim that the simulation as a whole does not come to understand Chinese. The fallacy involved in moving from part to whole is even more glaring here than in the original version of the Chinese Room Argument”. Copeland denies that connectionism implies that a room of people can simulate the brain.

John Haugeland writes (2002) that Searle’s response to the Systems Reply is flawed: “…what he now asks is what it would be like if he, in his own mind, were consciously to implement the underlying formal structures and operations that the theory says are sufficient to implement another mind”. According to Haugeland, his failure to understand Chinese is irrelevant: he is just the implementer. The larger system implemented would understand – there is a level-of-description fallacy.

Shaffer 2009 examines modal aspects of the logic of the CRA and argues that familiar versions of the Systems Reply are question-begging. But, Shaffer claims, a modalized version of the Systems Reply succeeds because there are possible worlds in which understanding is an emergent property of complex syntax manipulation. Nute 2011 is a reply to Shaffer.

Stevan Harnad has defended Searle’s argument against Systems Reply critics in two papers. In his 1989 paper, Harnad writes “Searle formulates the problem as follows: Is the mind a computer program? Or, more specifically, if a computer program simulates or imitates activities of ours that seem to require understanding (such as communicating in language), can the program itself be said to understand in so doing?” (Note the specific claim: the issue is taken to be whether the program itself understands.) Harnad concludes: “On the face of it, [the CR argument] looks valid. It certainly works against the most common rejoinder, the ‘Systems Reply’….” Harnad appears to follow Searle in linking understanding and states of consciousness: Harnad 2012 (Other Internet Resources) argues that Searle shows that the core problem of conscious “feeling” requires sensory connections to the real world. (See sections below “The Robot Reply” and “Intentionality” for discussion.)

Finally, some have argued that even if the room operator memorizes the rules and does all the operations inside his head, the room operator does not become the system. Cole (1984) and Block (1998) both argue that the result would not be identity of Searle with the system but much more like a case of multiple personality – distinct persons in a single head. The Chinese responding system would not be Searle, but a sub-part of him. In the CR case, one person (Searle) is an English monoglot and the other is a Chinese monoglot. The English-speaking person’s total unawareness of the meaning of the Chinese responses does not show that they are not understood. This line, of distinct persons, leads to the Virtual Mind Reply.

4.1.1 The Virtual Mind Reply

The Virtual Mind reply concedes, as does the Systems Reply, that the operator of the Chinese Room does not understand Chinese merely by running the paper machine. However, the Virtual Mind reply holds that what is important is whether understanding is created, not whether the Room operator is the agent that understands. Unlike the Systems Reply, the Virtual Mind reply (VMR) holds that a running system may create new, virtual entities that are distinct both from the system as a whole and from sub-systems such as the CPU or operator. In particular, a running system might create a distinct agent that understands Chinese. This virtual agent would be distinct from both the room operator and the entire system. The psychological traits, including linguistic abilities, of any mind created by artificial intelligence will depend entirely upon the program and the Chinese database, and will not be identical with the psychological traits and abilities of a CPU or the operator of a paper machine, such as Searle in the Chinese Room scenario. According to the VMR, the mistake in the Chinese Room Argument is to take the claim of strong AI to be “the computer understands Chinese” or “the System understands Chinese”. The claim at issue for AI should simply be whether “the running computer creates understanding of Chinese”.

Familiar models of virtual agents include characters in computer or video games and personal digital assistants, such as Apple’s Siri and Microsoft’s Cortana. These characters have various abilities and personalities, and the characters are not identical with the system hardware or program that creates them. A single running system might control two distinct agents, or physical robots, simultaneously, one of which converses only in Chinese and one of which can converse only in English, and which otherwise manifest very different personalities, memories, and cognitive abilities. Thus the VM reply asks us to distinguish between minds and their realizing systems.
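The structural point here, that one running system can realize several agents whose traits differ from one another and from the system itself, can be illustrated with a deliberately simple sketch. It is offered only as an illustration under stated assumptions: the class names, the dictionary standing in for "the program and its database", and the canned answers are all hypothetical, and nothing in the sketch is meant to show that any agent understands anything. It shows only that the traits belong to the agents, not to the dispatching host.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualAgent:
    """A hypothetical virtual agent whose traits come entirely from program data."""
    name: str
    language: str                                # the only language this agent converses in
    answers: dict = field(default_factory=dict)  # stands in for "the program and its database"

    def respond(self, language: str, question: str) -> str:
        # Questioned in a language it lacks, the agent gives no substantive answer.
        if language != self.language:
            return "?"
        return self.answers.get(question, "?")


class RunningSystem:
    """One running system (hardware plus program) that dispatches input to several agents.
    It stores no answers or languages of its own; it only routes."""

    def __init__(self, *agents: VirtualAgent) -> None:
        self._agents = {agent.name: agent for agent in agents}

    def handle(self, agent_name: str, language: str, question: str) -> str:
        return self._agents[agent_name].respond(language, question)


# Two agents realized by the same running system, with different "memories" and languages.
system = RunningSystem(
    VirtualAgent("chinese_speaker", "zh", {"breakfast": "congee"}),
    VirtualAgent("english_speaker", "en", {"breakfast": "toast"}),
)
print(system.handle("chinese_speaker", "zh", "breakfast"))  # congee
print(system.handle("english_speaker", "zh", "breakfast"))  # ?
```

Because the two agents give incompatible answers to the same question, they cannot both be identical with the single `RunningSystem` object that realizes them, which is the shape of the VMR's argument.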

Minsky (1980) and Sloman and Croucher (1980) suggested a Virtual Mind reply when the Chinese Room argument first appeared. In his widely read 1989 paper “Computation and Consciousness”, Tim Maudlin considers minimal physical systems that might implement a computational system running a program. His discussion revolves around his imaginary Olympia machine, a system of buckets that transfers water, implementing a Turing machine. Maudlin’s main target is the computationalists’ claim that such a machine could have phenomenal consciousness. However, in the course of his discussion, Maudlin considers the Chinese Room argument. Maudlin (citing Minsky, and Sloman and Croucher) points out a Virtual Mind reply that the agent that understands could be distinct from the physical system (414). Thus “Searle has done nothing to discount the possibility of simultaneously existing disjoint mentalities” (414–5).

Perlis (1992), Chalmers (1996) and Block (2002) have apparently endorsed versions of a Virtual Mind reply as well, as has Richard Hanley in The Metaphysics of Star Trek (1997). Penrose (2002) is a critic of this strategy, and Stevan Harnad scornfully dismisses such heroic resorts to metaphysics. Harnad defended Searle’s position in a “Virtual Symposium on Virtual Minds” (1992) against Patrick Hayes and Don Perlis. Perlis pressed a virtual minds argument derived, he says, from Maudlin. Chalmers (1996) notes that the room operator is just a causal facilitator, a “demon”, so that his states of consciousness are irrelevant to the properties of the system as a whole. Like Maudlin, Chalmers raises issues of personal identity – we might regard the Chinese Room as “two mental systems realized within the same physical space. The organization that gives rise to the Chinese experiences is quite distinct from the organization that gives rise to the demon’s [= room operator’s] experiences” (326).

Cole (1991, 1994) develops the reply and argues as follows: Searle’s argument requires that the agent of understanding be the computer itself or, in the Chinese Room parallel, the person in the room. However, Searle’s failure to understand Chinese in the room does not show that there is no understanding being created. One of the key considerations is that in Searle’s discussion the actual conversation with the Chinese Room is always seriously underspecified. Searle was considering Schank’s programs, which can only respond to a few questions about what happened in a restaurant, all in third person. But Searle wishes his conclusions to apply to any AI-produced responses, including those that would pass the toughest unrestricted Turing Test, i.e. they would be just the sort of conversations real people have with each other. If we flesh out the conversation in the original CR scenario to include questions in Chinese such as “How tall are you?”, “Where do you live?”, “What did you have for breakfast?”, “What is your attitude toward Mao?”, and so forth, it immediately becomes clear that the answers in Chinese are not Searle’s answers. Searle is not the author of the answers, and his beliefs and desires, memories and personality traits (apart from his industriousness!) are not reflected in the answers; in general, Searle’s traits are causally inert in producing the answers to the Chinese questions. This suggests the following conditional is true: if there is understanding of Chinese created by running the program, the mind understanding the Chinese would not be the computer, whether the computer is human or electronic. The person understanding the Chinese would be a distinct person from the room operator, with beliefs and desires bestowed by the program and its database. Hence Searle’s failure to understand Chinese while operating the room does not show that understanding is not being created.

Cole (1991) offers an additional argument that the mind doing the understanding is neither the mind of the room operator nor the system consisting of the operator and the program: running a suitably structured computer program might produce answers to questions submitted in Chinese and also answers to questions submitted in Korean. Yet the Chinese answers might apparently display completely different knowledge and memories, beliefs and desires than the answers to the Korean questions – along with a denial that the Chinese answerer knows any Korean, and vice versa. Thus the behavioral evidence would be that there were two non-identical minds (one understanding Chinese only, and one understanding Korean only). Since these might have mutually exclusive properties, they cannot be identical, and ipso facto, cannot be identical with the mind of the implementer in the room. Analogously, a video game might include a character with one set of cognitive abilities (smart, understands Chinese) as well as another character with an incompatible set (stupid, English monoglot). These inconsistent cognitive traits cannot be traits of the XBOX system that realizes them. Cole argues that the implication is that minds generally are more abstract than the systems that realize them (see Mind and Body in the Larger Philosophical Issues section).

In short, the Virtual Mind argument is this: since the evidence Searle provides that there is no understanding of Chinese is that he himself would not understand Chinese in the room, the Chinese Room Argument cannot refute a differently formulated but equally strong AI claim, namely that it is possible to create understanding using a programmed digital computer. Maudlin (1989) says that Searle has not adequately responded to this criticism.

Others however have replied to the VMR, including Stevan Harnad and mathematical physicist Roger Penrose. Penrose is generally sympathetic to the points Searle raises with the Chinese Room argument, and has argued against the Virtual Mind reply. Penrose does not believe that computational processes can account for consciousness, both on Chinese Room grounds, as well as because of limitations on formal systems revealed by Kurt Gödel’s incompleteness proof. (Penrose has two books on mind and consciousness; Chalmers and others have responded to Penrose’s appeals to Gödel.) In his 2002 article “Consciousness, Computation, and the Chinese Room” that specifically addresses the Chinese Room argument, Penrose argues that the Chinese Gym variation – with a room expanded to the size of India, with Indians doing the processing – shows it is very implausible to hold there is “some kind of disembodied ‘understanding’ associated with the person’s carrying out of that algorithm, and whose presence does not impinge in any way upon his own consciousness” (230–1). Penrose concludes the Chinese Room argument refutes Strong AI. Christian Kaernbach (2005) reports that he subjected the virtual mind theory to an empirical test, with negative results.

4.2 The Robot Reply

The Robot Reply concedes Searle is right about the Chinese Room scenario: it shows that a computer trapped in a computer room cannot understand language, or know what words mean. The Robot reply is responsive to the problem of knowing the meaning of the Chinese word for hamburger – Searle’s example of something the room operator would not know. It seems reasonable to hold that most of us know what a hamburger is because we have seen one, and perhaps even made one, or tasted one, or at least heard people talk about hamburgers and understood what they are by relating them to things we do know by seeing, making, and tasting. Given this is how one might come to know what hamburgers are, the Robot Reply suggests that we put a digital computer in a robot body, with sensors, such as video cameras and microphones, and add effectors, such as wheels to move around with, and arms with which to manipulate things in the world. Such a robot – a computer with a body – might do what a child does, learn by seeing and doing. The Robot Reply holds that such a digital computer in a robot body, freed from the room, could attach meanings to symbols and actually understand natural language. Margaret Boden, Tim Crane, Daniel Dennett, Jerry Fodor, Stevan Harnad, Hans Moravec and Georges Rey are among those who have endorsed versions of this reply at one time or another. The Robot Reply in effect appeals to “wide content” or “externalist semantics”. This can agree with Searle that syntax and internal connections in isolation from the world are insufficient for semantics, while holding that suitable causal connections with the world can provide content to the internal symbols.

About the time Searle was pressing the CRA, many in philosophy of language and mind were recognizing the importance of causal connections to the world as the source of meaning or reference for words and concepts. Hilary Putnam 1981 argued that a Brain in a Vat, isolated from the world, might speak or think in a language that sounded like English, but it would not be English – hence a brain in a vat could not wonder if it was a brain in a vat (because of its sensory isolation, its words “brain” and “vat” do not refer to brains or vats). The view that meaning was determined by connections with the world became widespread. Searle resisted this turn outward and continued to think of meaning as subjective and connected with consciousness.

A related view, that minds are best understood as embodied or embedded in the world, has gained many supporters since the 1990s, contra Cartesian solipsistic intuitions. Organisms rely on environmental features for the success of their behavior. So whether one takes a mind to be a symbol processing system, with the symbols getting their content from sensory connections with the world, or a non-symbolic system that succeeds by being embedded in a particular environment, the importance of things outside the head has come to the fore. Hence many are sympathetic to some form of the Robot Reply: a computational system might understand, provided it is acting in the world. E.g. Carter 2007 in a textbook on philosophy and AI concludes “The lesson to draw from the Chinese Room thought experiment is that embodied experience is necessary for the development of semantics.”

However Searle does not think that the Robot Reply to the Chinese Room argument is any stronger than the Systems Reply. All the sensors can do is provide additional input to the computer – and it will be just syntactic input. We can see this by making a parallel change to the Chinese Room scenario. Suppose the man in the Chinese Room receives, in addition to the Chinese characters slipped under the door, a stream of binary digits that appear, say, on a ticker tape in a corner of the room. The instruction books are augmented to use the numerals from the tape as input, along with the Chinese characters. Unbeknownst to the man in the room, the symbols on the tape are the digitized output of a video camera (and possibly other sensors). Searle argues that additional syntactic inputs will do nothing to allow the man to associate meanings with the Chinese characters. It is just more work for the man in the room.
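Searle's point that sensor data reaches the room as "just more syntax" can be made concrete with a toy sketch. The 8-bit pixel encoding, the two-pixel "frame", the function names, and the single rulebook entry are illustrative assumptions, not anything Searle specifies. The operator's step matches the tape digits exactly as it matches the Chinese characters, and nothing in it depends on the digits having come from a camera.

```python
def digitize(frame: list) -> str:
    """Hypothetical camera: encode 0-255 brightness values as a string of binary digits."""
    return "".join(format(pixel, "08b") for row in frame for pixel in row)


def operator_step(rulebook: dict, characters: str, tape: str) -> str:
    """The man in the room: match the incoming characters and tape digits against the
    rulebook and copy out the listed reply. Nothing here depends on where `tape` came from."""
    return rulebook.get((characters, tape), "")


# One illustrative rule: "what do you see?" plus one particular tape pattern yields "I see you".
frame = [[0, 255]]  # a toy two-pixel "image"
rulebook = {("你看见什么", digitize(frame)): "我看见你"}

print(digitize(frame))  # 0000000011111111
reply = operator_step(rulebook, "你看见什么", digitize(frame))
```

Whether such syntactic coupling to the world could ever suffice for meaning is exactly what divides Searle from advocates of the Robot Reply; the sketch only shows what "additional syntactic input" amounts to from the operator's side.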

Jerry Fodor, Hilary Putnam, and David Lewis were principal architects of the computational theory of mind that Searle’s wider argument attacks. In his original 1980 reply to Searle, Fodor allows Searle is certainly right that “instantiating the same program as the brain does is not, in and of itself, sufficient for having those propositional attitudes characteristic of the organism that has the brain.” But Fodor holds that Searle is wrong about the robot reply. A computer might have propositional attitudes if it has the right causal connections to the world – but those are not ones mediated by a man sitting in the head of the robot. We don’t know what the right causal connections are. Searle commits the fallacy of inferring from “the little man is not the right causal connection” to conclude that no causal linkage would succeed. There is considerable empirical evidence that mental processes involve “manipulation of symbols”; Searle gives us no alternative explanation (this is sometimes called Fodor’s “Only Game in Town” argument for computational approaches). In the 1980s and 1990s Fodor wrote extensively on what the connections must be between a brain state and the world for the state to have intentional (representational) properties, while also emphasizing that computationalism has limits because the computations are intrinsically local and so cannot account for abductive reasoning.

In a later piece, “Yin and Yang in the Chinese Room” (in Rosenthal 1991 pp.524–525), Fodor substantially revises his 1980 view. He distances himself from his earlier version of the robot reply, and holds instead that “instantiation” should be defined in such a way that the symbol must be the proximate cause of the effect – no intervening guys in a room. So Searle in the room is not an instantiation of a Turing Machine, and “Searle’s setup does not instantiate the machine that the brain instantiates.” He concludes: “…Searle’s setup is irrelevant to the claim that strong equivalence to a Chinese speaker’s brain is ipso facto sufficient for speaking Chinese.” Searle says of Fodor’s move, “Of all the zillions of criticisms of the Chinese Room argument, Fodor’s is perhaps the most desperate. He claims that precisely because the man in the Chinese room sets out to implement the steps in the computer program, he is not implementing the steps in the computer program. He offers no argument for this extraordinary claim.” (in Rosenthal 1991, p. 525)

In a 1986 paper, Georges Rey advocated a combination of the system and robot reply, after noting that the original Turing Test is insufficient as a test of intelligence and understanding, and that the isolated system Searle describes in the room is certainly not functionally equivalent to a real Chinese speaker sensing and acting in the world. In a 2002 second look, “Searle’s Misunderstandings of Functionalism and Strong AI”, Rey again defends functionalism against Searle, and in the particular form Rey calls the “computational-representational theory of thought – CRTT”. CRTT is not committed to attributing thought to just any system that passes the Turing Test (like the Chinese Room). Nor is it committed to a conversation manual model of understanding natural language. Rather, CRTT is concerned with intentionality, natural and artificial (the representations in the system are semantically evaluable – they are true or false, hence have aboutness). Searle saddles functionalism with the “blackbox” character of behaviorism, but functionalism cares how things are done. Rey sketches “a modest mind” – a CRTT system that has perception, can make deductive and inductive inferences, makes decisions on the basis of goals and representations of how the world is, and can process natural language by converting to and from its native representations. To explain the behavior of such a system we would need to use the same attributions needed to explain the behavior of a normal Chinese speaker.

If we flesh out the Chinese conversation in the context of the Robot Reply, we may again see evidence that the entity that understands is not the operator inside the room. If we ask the robot system the Chinese translation of “what do you see?”, we might get the answer “My old friend Shakey”, or “I see you!”. Whereas if we phone Searle in the room and ask the same question in English we might get “These same four walls” or “these damn endless instruction books and notebooks.” Again this is evidence that we have distinct responders here, an English speaker and a Chinese speaker, who see and do quite different things. If the giant robot goes on a rampage and smashes much of Tokyo, and all the while oblivious Searle is just following the program in his notebooks in the room, Searle is not guilty of homicide and mayhem, because he is not the agent committing the acts.

Tim Crane discusses the Chinese Room argument in his 1991 book, The Mechanical Mind. He cites the Churchlands’ luminous room analogy, but then goes on to argue that in the course of operating the room, Searle would learn the meaning of the Chinese: “…if Searle had not just memorized the rules and the data, but also started acting in the world of Chinese people, then it is plausible that he would before too long come to realize what these symbols mean.”(127). (Rapaport 2006 presses an analogy between Helen Keller and the Chinese Room.) Crane appears to end with a version of the Robot Reply: “Searle’s argument itself begs the question by (in effect) just denying the central thesis of AI – that thinking is formal symbol manipulation. But Searle’s assumption, none the less, seems to me correct … the proper response to Searle’s argument is: sure, Searle-in-the-room, or the room alone, cannot understand Chinese. But if you let the outside world have some impact on the room, meaning or ‘semantics’ might begin to get a foothold. But of course, this concedes that thinking cannot be simply symbol manipulation.” (129) The idea that learning grounds understanding has led to work in developmental robotics (a.k.a. epigenetic robotics). This AI research area seeks to replicate key human learning abilities, such as robots that are shown an object from several angles while being told in natural language the name of the object.

Margaret Boden 1988 also argues that Searle mistakenly supposes programs are pure syntax. But programs bring about the activity of certain machines: “The inherent procedural consequences of any computer program give it a toehold in semantics, where the semantics in question is not denotational, but causal.” (250) Thus a robot might have causal powers that enable it to refer to a hamburger.

Stevan Harnad also finds important our sensory and motor capabilities: “Who is to say that the Turing Test, whether conducted in Chinese or in any other language, could be successfully passed without operations that draw on our sensory, motor, and other higher cognitive capacities as well? Where does the capacity to comprehend Chinese begin and the rest of our mental competence leave off?” Harnad believes that symbolic functions must be grounded in “robotic” functions that connect a system with the world. And he thinks this counts against symbolic accounts of mentality, such as Jerry Fodor’s, and, one suspects, the approach of Roger Schank that was Searle’s original target. Harnad 2012 (Other Internet Resources) argues that the CRA shows that even with a robot with symbols grounded in the external world, there is still something missing: feeling, such as the feeling of understanding.

However Ziemke 2016 argues a robotic embodiment with layered systems of bodily regulation may ground emotion and meaning, and Seligman 2019 argues that “perceptually grounded” approaches to natural language processing (NLP) have the “potential to display intentionality, and thus after all to foster a truly meaningful semantics that, in the view of Searle and other skeptics, is intrinsically beyond computers’ capacity.”

4.3 The Brain Simulator Reply

Consider a computer that operates in quite a different manner than the usual AI program with scripts and operations on sentence-like strings of symbols. The Brain Simulator reply asks us to suppose instead the program simulates the actual sequence of nerve firings that occur in the brain of a native Chinese language speaker when that person understands Chinese – every nerve, every firing. Since the computer then works the very same way as the brain of a native Chinese speaker, processing information in just the same way, it will understand Chinese. Paul and Patricia Churchland have set out a reply along these lines, discussed below.

In response to this, Searle argues that it makes no difference. He suggests a variation on the brain simulator scenario: suppose that in the room the man has a huge set of valves and water pipes, in the same arrangement as the neurons in a native Chinese speaker’s brain. The program now tells the man which valves to open in response to input. Searle claims that it is obvious that there would be no understanding of Chinese. (Note however that the basis for this claim is no longer simply that Searle himself wouldn’t understand Chinese – it seems clear that now he is just facilitating the causal operation of the system and so we rely on our Leibnizian intuition that water-works don’t understand (see also Maudlin 1989).) Searle concludes that a simulation of brain activity is not the real thing.

However, following Pylyshyn 1980, Cole and Foelber 1984, Chalmers 1996, we might wonder about hybrid systems. Pylyshyn writes:

If more and more of the cells in your brain were to be replaced by integrated circuit chips, programmed in such a way as to keep the input-output function each unit identical to that of the unit being replaced, you would in all likelihood just keep right on speaking exactly as you are doing now except that you would eventually stop meaning anything by it. What we outside observers might take to be words would become for you just certain noises that circuits caused you to make.

These cyborgization thought experiments can be linked to the Chinese Room. Suppose Otto has a neural disease that causes one of the neurons in his brain to fail, but surgeons install a tiny remotely controlled artificial neuron, a synron, alongside his disabled neuron. The control of Otto’s neuron is by John Searle in the Chinese Room, unbeknownst to both Searle and Otto. Tiny wires connect the artificial neuron to the synapses on the cell-body of his disabled neuron. When his artificial neuron is stimulated by neurons that synapse on his disabled neuron, a light goes on in the Chinese Room. Searle then manipulates some valves and switches in accord with a program. That, via the radio link, causes Otto’s artificial neuron to release neuro-transmitters from its tiny artificial vesicles. If Searle’s programmed activity causes Otto’s artificial neuron to behave just as his disabled natural neuron once did, the behavior of the rest of his nervous system will be unchanged. Alas, Otto’s disease progresses; more neurons are replaced by synrons controlled by Searle. Ex hypothesi the rest of the world will not notice the difference; will Otto? If so, when? And why?
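The behavioral premise of the scenario, that replacing units with substitutes having the same input-output function leaves the behavior of the rest of the system unchanged, can be checked in a deliberately trivial sketch. The threshold units, the chain architecture, and the use of the name `synron` for a Python function are illustrative assumptions. The sketch can say nothing about the question the thought experiment actually poses, namely whether Otto would notice; it confirms only that outside observers could not.

```python
def natural_neuron(x: float) -> float:
    # Toy threshold unit: outputs 1.0 when its input exceeds 0.5, else 0.0.
    return 1.0 if x > 0.5 else 0.0


def synron(x: float) -> float:
    # Hypothetical replacement with different internals (a looked-up rule, as if
    # consulted by an operator) but the same input-output function.
    rule = {True: 1.0, False: 0.0}
    return rule[x > 0.5]


def run_chain(units, signal: float) -> float:
    # Pass a signal through a chain of units; each output feeds the next unit.
    for unit in units:
        signal = unit(signal)
    return signal


def gradual_replacement(n_units: int, inputs):
    """Replace natural units one at a time, recording the chain's outputs at each stage."""
    history = []
    for k in range(n_units + 1):
        units = [synron] * k + [natural_neuron] * (n_units - k)
        history.append(tuple(run_chain(units, x) for x in inputs))
    return history


stages = gradual_replacement(5, [0.1, 0.4, 0.6, 0.9])
print(len(set(stages)))  # 1: every stage behaves identically
```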

Under the rubric “The Combination Reply”, Searle also considers a system with the features of all three of the preceding: a robot with a brain-simulating digital computer in its cranium, such that the system as a whole behaves indistinguishably from a human. Since the normal input to the brain is from sense organs, it is natural to suppose that most advocates of the Brain Simulator Reply have in mind such a combination of brain simulation, Robot, and Systems Reply. Some (e.g. Rey 1986) argue it is reasonable to attribute intentionality to such a system as a whole. Searle agrees that it would indeed be reasonable to attribute understanding to such an android system – but only as long as you don’t know how it works. As soon as you know the truth – it is a computer, uncomprehendingly manipulating symbols on the basis of syntax, not meaning – you would cease to attribute intentionality to it.

(One assumes this would be true even if it were one’s spouse, with whom one had built a life-long relationship, that was revealed to hide a silicon secret. Science fiction stories, including episodes of Rod Serling’s television series The Twilight Zone, have been based on such possibilities (the face of the beloved peels away to reveal the awful android truth); however, Steven Pinker (1997) mentions one episode in which the android’s secret was known from the start, but the protagonist developed a romantic relationship with the android.)

On its tenth anniversary the Chinese Room argument was featured in the general science periodical Scientific American. Leading the opposition to Searle’s lead article in that issue were philosophers Paul and Patricia Churchland. The Churchlands agree with Searle that the Chinese Room does not understand Chinese, but hold that the argument itself exploits our ignorance of cognitive and semantic phenomena. They raise a parallel case of “The Luminous Room” where someone waves a magnet and argues that the absence of resulting visible light shows that Maxwell’s electromagnetic theory is false. The Churchlands advocate a view of the brain as a connectionist system, a vector transformer, not a system manipulating symbols according to structure-sensitive rules. The system in the Chinese Room uses the wrong computational strategies. Thus they agree with Searle against traditional AI, but they presumably would endorse what Searle calls “the Brain Simulator Reply”, arguing that, as with the Luminous Room, our intuitions fail us when considering such a complex system, and it is a fallacy to move from part to whole: “… no neuron in my brain understands English, although my whole brain does.”

In his 1991 book Microcognition, Andy Clark holds that Searle is right that a computer running Schank’s program does not know anything about restaurants, “at least if by ‘know’ we mean anything like ‘understand’”. But Searle thinks that this would apply to any computational model, while Clark, like the Churchlands, holds that Searle is wrong about connectionist models. Clark’s interest is thus in the brain-simulator reply. The brain thinks in virtue of its physical properties. What physical properties of the brain are important? Clark answers that what is important about brains are “variable and flexible substructures” which conventional AI systems lack. But that doesn’t mean computationalism or functionalism is false. It depends on what level you take the functional units to be. Clark defends “microfunctionalism” – one should look to a fine-grained functional description, e.g. neural net level. Clark cites William Lycan approvingly contra Block’s absent qualia objection – yes, there can be absent qualia, if the functional units are made large. But that does not constitute a refutation of functionalism generally. So Clark’s views are not unlike the Churchlands’, conceding that Searle is right about Schank and symbolic-level processing systems, but holding that he is mistaken about connectionist systems.

Similarly Ray Kurzweil (2002) argues that Searle’s argument could be turned around to show that human brains cannot understand – the brain succeeds by manipulating neurotransmitter concentrations and other mechanisms that are in themselves meaningless. In criticism of Searle’s response to the Brain Simulator Reply, Kurzweil says: “So if we scale up Searle’s Chinese Room to be the rather massive ‘room’ it needs to be, who’s to say that the entire system of a hundred trillion people simulating a Chinese Brain that knows Chinese isn’t conscious? Certainly, it would be correct to say that such a system knows Chinese. And we can’t say that it is not conscious anymore than we can say that about any other process. We can’t know the subjective experience of another entity….”

4.4 The Other Minds Reply

Related to the preceding is The Other Minds Reply: “How do you know that other people understand Chinese or anything else? Only by their behavior. Now the computer can pass the behavioral tests as well as they can (in principle), so if you are going to attribute cognition to other people you must in principle also attribute it to computers.”

Searle’s (1980) reply to this is very short:

The problem in this discussion is not about how I know that other people have cognitive states, but rather what it is that I am attributing to them when I attribute cognitive states to them. The thrust of the argument is that it couldn’t be just computational processes and their output because the computational processes and their output can exist without the cognitive state. It is no answer to this argument to feign anesthesia. In ‘cognitive sciences’ one presupposes the reality and knowability of the mental in the same way that in physical sciences one has to presuppose the reality and knowability of physical objects.

Critics hold that if the evidence we have that humans understand is the same as the evidence we might have that a visiting extra-terrestrial alien understands, which is the same as the evidence that a robot understands, the presuppositions we may make in the case of our own species are not relevant, for presuppositions are sometimes false. For similar reasons, Turing, in proposing the Turing Test, was specifically worried about our presuppositions and chauvinism. If the reasons for the presuppositions regarding humans are pragmatic, in that they enable us to predict the behavior of humans and to interact effectively with them, perhaps the presupposition could apply equally to computers (similar considerations are pressed by Dennett, in his discussions of what he calls the Intentional Stance).

Searle raises the question of just what we are attributing in attributing understanding to other minds, saying that it is more than complex behavioral dispositions. For Searle, the additional element seems to be certain states of consciousness, as is seen in his 2010 summary of the CRA conclusions. Terry Horgan (2013) endorses this claim: “the real moral of Searle’s Chinese room thought experiment is that genuine original intentionality requires the presence of internal states with intrinsic phenomenal character that is inherently intentional…” But this tying of understanding to phenomenal consciousness raises a host of issues.

We attribute limited understanding of language to toddlers, dogs, and other animals, but it is not clear that we are ipso facto attributing unseen states of subjective consciousness – what do we know of the hidden states of exotic creatures? Ludwig Wittgenstein (the Private Language Argument) and his followers pressed similar points. Altered qualia possibilities, analogous to the inverted spectrum, arise: suppose I ask “what’s the sum of 5 and 7” and you respond “the sum of 5 and 7 is 12”, but as you heard my question you had the conscious experience of hearing and understanding “what is the sum of 10 and 14”, though you were in the computational states appropriate for producing the correct sum and so said “12”. Are there certain conscious states that are “correct” for certain functional states? Wittgenstein’s considerations appear to suggest that the subjective state is irrelevant, at best epiphenomenal, if a language user displays appropriate linguistic behavior. After all, we are taught language on the basis of our overt responses, not our qualia. The mathematical savant Daniel Tammet reports that when he generates the decimal expansion of pi to thousands of digits he experiences colors that reveal the next digit, but even here it may be that Tammet’s performance is produced not by the colors he experiences but by unconscious neural computation. The possible importance of subjective states is further considered in the section on Intentionality, below.

In the 30 years since the CRA there has been philosophical interest in zombies – creatures that look like and behave just as normal humans, including linguistic behavior, yet have no subjective consciousness. A difficulty for claiming that subjective states of consciousness are crucial for understanding meaning will arise in these cases of absent qualia: we can’t tell the difference between zombies and non-zombies, and so on Searle’s account we can’t tell the difference between those that really understand English and those that don’t. And if you and I can’t tell the difference between those who understand language and zombies who behave like they do but don’t really, then neither can any selection factor in the history of human evolution – to predators, prey, and mates, zombies and true understanders, with the “right” conscious experience, have been indistinguishable. But then there appears to be a distinction without a difference. In any case, Searle’s short reply to the Other Minds Reply may be too short.

Descartes famously argued that speech was sufficient for attributing minds and consciousness to others, and infamously argued that it was necessary. Turing was in effect endorsing Descartes’ sufficiency condition, at least for intelligence, while substituting written for oral linguistic behavior. Since most of us use dialog as a sufficient condition for attributing understanding, and Searle’s argument holds that speech is a sufficient condition for attributing understanding to humans but not to anything that doesn’t share our biology, an account would appear to be required of what additionally is being attributed, and what can justify the additional attribution. Further, if being conspecific is key on Searle’s account, a natural question arises as to what circumstances would justify us in attributing understanding (or consciousness) to extra-terrestrial aliens who do not share our biology. Offending ETs by withholding attributions of understanding until after doing a post-mortem may be risky.

Hans Moravec, director of the Robotics laboratory at Carnegie Mellon University, and author of Robot: Mere Machine to Transcendent Mind, argues that Searle’s position merely reflects intuitions from traditional philosophy of mind that are out of step with the new cognitive science. Moravec endorses a version of the Other Minds reply. It makes sense to attribute intentionality to machines for the same reasons it makes sense to attribute it to humans; his “interpretative position” is similar to the views of Daniel Dennett. Moravec goes on to note that one of the things we attribute to others is the ability to make attributions of intentionality, and then we make such attributions to ourselves. It is such self-representation that is at the heart of consciousness. These capacities appear to be implementation independent, and hence possible for aliens and suitably programmed computers.

As we have seen, the reason that Searle thinks we can disregard the evidence in the case of robots and computers is that we know that their processing is syntactic, and this fact trumps all other considerations. Indeed, Searle believes this is the larger point that the Chinese Room merely illustrates. This larger point is addressed in the Syntax and Semantics section below.

4.5 The Intuition Reply

Many responses to the Chinese Room argument have noted that, as with Leibniz’ Mill, the argument appears to be based on intuition: the intuition that a computer (or the man in the room) cannot think or have understanding. For example, Ned Block (1980) in his original BBS commentary says “Searle’s argument depends for its force on intuitions that certain entities do not think.” But, Block argues, (1) intuitions sometimes can and should be trumped and (2) perhaps we need to bring our concept of understanding in line with a reality in which certain computer robots belong to the same natural kind as humans. Similarly Margaret Boden (1988) points out that we can’t trust our untutored intuitions about how mind depends on matter; developments in science may change our intuitions. Indeed, elimination of bias in our intuitions was precisely what motivated Turing (1950) to propose the Turing Test, a test that was blind to the physical character of the system replying to questions. Some of Searle’s critics in effect argue that he has merely pushed the reliance on intuition back, into the room.

For example, one can hold that despite Searle’s intuition that he would not understand Chinese while in the room, perhaps he is mistaken and does, albeit unconsciously. Hauser (2002) accuses Searle of Cartesian bias in his inference from “it seems to me quite obvious that I understand nothing” to the conclusion that he really understands nothing. Normally, if one understands English or Chinese, one knows that one does – but not necessarily. Searle lacks the normal introspective awareness of understanding – but this, while abnormal, is not conclusive.

Critics of the CRA note that our intuitions about intelligence, understanding and meaning may all be unreliable. With regard to meaning, Wakefield 2003, following Block 1998, defends what Wakefield calls “the essentialist objection” to the CRA, namely that a computational account of meaning is not an analysis of ordinary concepts and their related intuitions. Rather, we are building a scientific theory of meaning that may require revising our intuitions. As a theory, it gets its evidence from its explanatory power, not its accord with pre-theoretic intuitions (however, Wakefield himself argues that computational accounts of meaning are afflicted by a pernicious indeterminacy (pp. 308ff)).

Other critics focusing on the role of intuitions in the CRA argue that our intuitions regarding both intelligence and understanding may also be unreliable, and perhaps incompatible even with current science. With regard to understanding, Steven Pinker, in How the Mind Works (1997), holds that “… Searle is merely exploring facts about the English word understand…. People are reluctant to use the word unless certain stereotypical conditions apply…” But, Pinker claims, scientifically speaking nothing is at stake. Pinker objects to Searle’s appeal to the “causal powers of the brain” by noting that the apparent locus of the causal powers is the “patterns of interconnectivity that carry out the right information processing”. Pinker ends his discussion by citing a science fiction story in which aliens, anatomically quite unlike humans, cannot believe that humans think when they discover that our heads are filled with meat. The aliens’ intuitions are unreliable – presumably ours may be so as well.

Clearly the CRA turns on what is required to understand language. Schank 1978 clarifies his claim about what he thinks his programs can do: “By ‘understand’, we mean SAM [one of his programs] can create a linked causal chain of conceptualizations that represent what took place in each story.” This is a nuanced understanding of “understanding”, whereas the Chinese Room thought experiment does not turn on a technical understanding of “understanding”, but rather on intuitions about our ordinary competence when we understand a word like “hamburger”. Indeed, by 2015 Schank had distanced himself from weak senses of “understand”, holding that no computer can “understand when you tell it something”, and that IBM’s WATSON “doesn’t know what it is saying”. Schank’s program may get links right, but arguably does not know what the linked entities are. Whether it does or not depends on what concepts are; see section 5.1. Furthermore, it is possible that when it comes to attributing understanding of language we have different standards for different things – more relaxed for dogs and toddlers. Some things understand a language “un poco”. Searle (1980) concedes that there are degrees of understanding, but says that all that matters is that there are clear cases of no understanding, and AI programs are an example: “The computer understanding is not just (like my understanding of German) partial or incomplete; it is zero.”

Some defenders of AI are also concerned with how our understanding of understanding bears on the Chinese Room argument. In their paper “A Chinese Room that Understands” AI researchers Simon and Eisenstadt (2002) argue that whereas Searle refutes “logical strong AI”, the thesis that a program that passes the Turing Test will necessarily understand, Searle’s argument does not impugn “Empirical Strong AI” – the thesis that it is possible to program a computer that convincingly satisfies ordinary criteria of understanding. They hold, however, that it is impossible to settle these questions “without employing a definition of the term ‘understand’ that can provide a test for judging whether the hypothesis is true or false”. They cite W.V.O. Quine’s Word and Object as showing that there is always empirical uncertainty in attributing understanding to humans. The Chinese Room is a Clever Hans trick (Clever Hans was a horse who appeared to clomp out the answers to simple arithmetic questions, but it was discovered that Hans could detect unconscious cues from his trainer). Similarly, the man in the room doesn’t understand Chinese, and could be exposed by watching him closely. (Simon and Eisenstadt do not explain just how this would be done, or how it would affect the argument.) Citing the work of Rudolf Carnap, Simon and Eisenstadt argue that to understand is not just to exhibit certain behavior, but to use “intensions” that determine extensions, and that one can see in actual programs that they do use appropriate intensions. They discuss three actual AI programs, defend various attributions of mentality to them, including understanding, and conclude that computers understand; they learn “intensions by associating words and other linguistic structure with their denotations, as detected through sensory stimuli”. And since we can see exactly how the machines work, “it is, in fact, easier to establish that a machine exhibits understanding than to establish that a human exhibits understanding….” Thus, they conclude, the evidence for empirical strong AI is overwhelming.